Featured Post

April 24, 2024

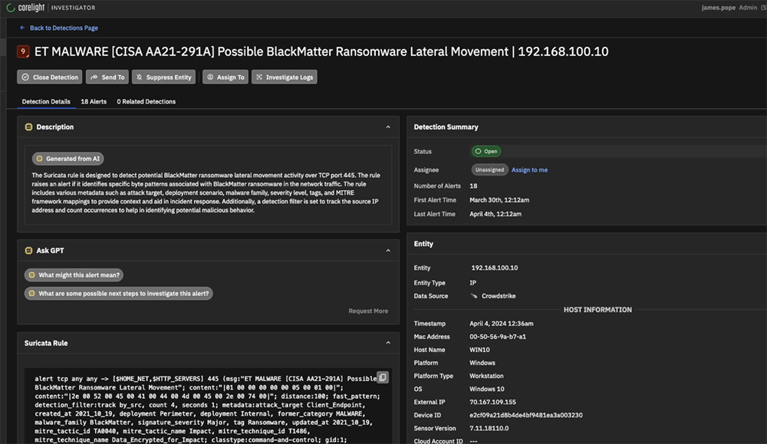

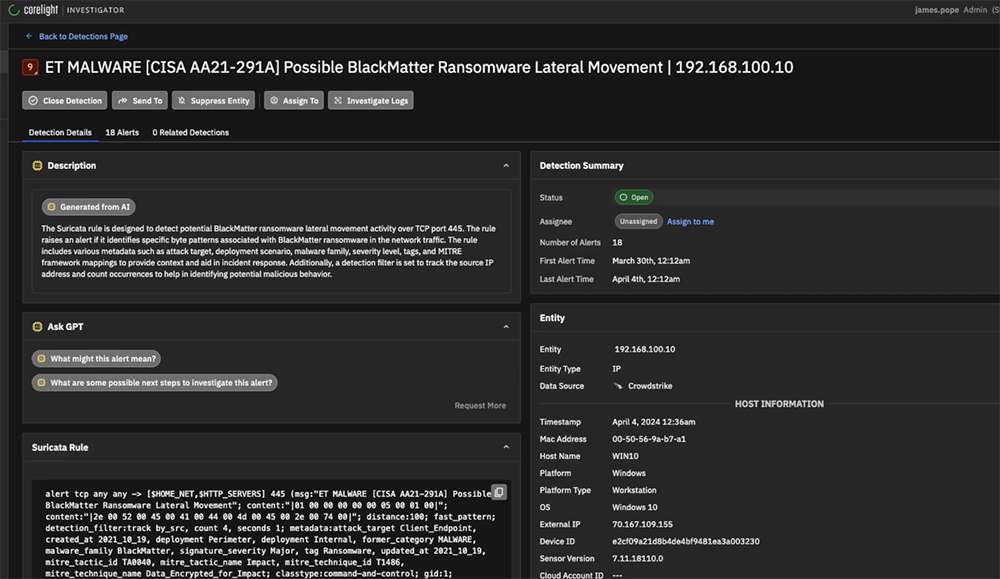

In the ever-evolving landscape of cybersecurity threats, staying ahead requires more than detection; it demands correlation and analysis that support informed decision-making. Understanding the context surrounding an alert is essential to mitigating risk effectively. That's why we're thrilled to announce the integration of CrowdStrike...